Nvidia Introduces Blackwell B200 Chip and GB200 ‘Superchip’ at GTC

During Nvidia’s annual GTC conference at the San Jose Convention Center this week, the company introduced its most powerful single-chip GPU, the Blackwell B200 tensor core chip. The chip has 208 billion transistors, and Nvidia claims it can reduce both the operating cost and the energy consumption of AI inference (such as running ChatGPT) by up to 25 times compared to the H100.

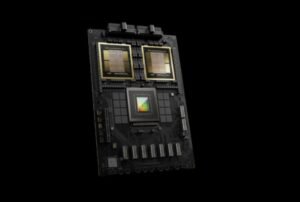

Additionally, Nvidia unveiled the GB200, a “superchip” comprising two B200 chips and a Grace CPU for enhanced performance.

In his keynote address on Monday afternoon, Nvidia CEO Jensen Huang emphasized the need for larger GPUs. He stated that the Blackwell platform would enable the training of trillion-parameter AI models, surpassing the capabilities of today’s generative AI models. For perspective, OpenAI’s GPT-3, introduced in 2020, had 175 billion parameters, illustrating the increasing complexity of AI models.

Nvidia’s Blackwell Architecture

The Blackwell architecture from Nvidia pays homage to David Harold Blackwell, a pioneering mathematician renowned for his work in game theory and statistics. As the first Black scholar to be inducted into the National Academy of Sciences, Blackwell’s legacy is honored through this platform.

This architecture introduces six cutting-edge technologies for accelerated computing. These include a second-generation Transformer Engine, fifth-generation NVLink, RAS Engine, secure AI functionalities, and a decompression engine optimized for expediting database queries.

Numerous prominent organizations, including Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI, are anticipated to adopt the Blackwell platform. Nvidia’s press release features endorsements from key tech CEOs, such as Mark Zuckerberg and Sam Altman, praising the platform’s capabilities.

Nvidia’s Evolution from Gaming to AI Dominance

Initially developed primarily for gaming acceleration, GPUs have proven highly effective for AI tasks due to their parallel architecture, which expedites the multitude of matrix multiplication operations essential for running modern neural networks. As new deep learning architectures emerged in the 2010s, Nvidia seized the opportunity to create specialized GPUs tailored specifically for accelerating AI models.

Nvidia’s emphasis on the data center segment has significantly boosted its wealth and market value, and the introduction of these new chips perpetuates this trend. While Nvidia’s gaming GPU revenue stood at $2.9 billion in the last quarter, it pales in comparison to the data center revenue, which reached $18.4 billion. This disparity underscores the continued dominance of Nvidia’s data center business.

The Grace Blackwell GB200 chip mentioned earlier is integral to the new NVIDIA GB200 NVL72, a liquid-cooled data center computer system tailored for AI training and inference tasks. This system combines 36 GB200s, for a total of 72 B200 GPUs and 36 Grace CPUs. The chips are interconnected using fifth-generation NVLink technology.
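The chip counts quoted for the NVL72 follow directly from the GB200's makeup (two B200 GPUs plus one Grace CPU per superchip). A minimal sketch of that arithmetic is below; the constant and function names are illustrative only and do not come from Nvidia.

```python
# Figures as quoted in the article; names are hypothetical, chosen for illustration.
B200_PER_GB200 = 2     # each GB200 "superchip" pairs two B200 GPUs...
GRACE_PER_GB200 = 1    # ...with one Grace CPU
GB200_PER_NVL72 = 36   # superchips in one GB200 NVL72 system


def nvl72_totals(superchips: int = GB200_PER_NVL72) -> dict:
    """Return total GPU and CPU counts for a system built from `superchips` GB200s."""
    return {
        "b200_gpus": superchips * B200_PER_GB200,
        "grace_cpus": superchips * GRACE_PER_GB200,
    }


totals = nvl72_totals()
print(totals)
```

With the default of 36 superchips, the totals match the 72 GPUs and 36 CPUs cited above, which is also where the "72" in the product name comes from.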

Nvidia’s GB200 NVL72: Revolutionizing AI Performance

Nvidia stated that the GB200 NVL72 offers up to a 30x performance boost compared to the same number of NVIDIA H100 Tensor Core GPUs for LLM inference workloads, while reducing cost and energy consumption by up to 25x.

Such a substantial speed increase could potentially yield significant savings in both money and time when running today’s AI models. Moreover, it opens doors for the development of more intricate AI models. Generative AI models, such as those powering Google Gemini and AI image generators, are notorious for their high computational demands. Shortages in computing power have often been cited as a hindrance to progress and research in the AI field, prompting figures like OpenAI CEO Sam Altman to pursue deals to establish new chip foundries.

While Nvidia’s claims regarding the capabilities of the Blackwell platform are impressive, its real-world performance and adoption remain to be seen as organizations begin to integrate and utilize the technology themselves. Competitors like Intel and AMD are also vying for a share of Nvidia’s AI market.

Nvidia has announced that Blackwell-based products will be available from various partners later this year.
