Intel Unveils Gaudi 3: Powering Next-Gen AI Systems

On Tuesday, Intel introduced its newest artificial intelligence chip, dubbed Gaudi 3, in response to the urgent demand for semiconductors capable of training and deploying large AI models, including those powering systems like OpenAI’s ChatGPT.

According to Intel, the Gaudi 3 chip is more than twice as power-efficient as Nvidia’s H100 GPU and can run AI models one-and-a-half times faster. It comes in several configurations, such as a package of eight Gaudi 3 chips on a single motherboard or a card that slots into existing systems. Intel’s launch also comes only a few weeks after Nvidia announced its Blackwell AI chips, underscoring the growing rivalry in the AI chip market.

The #IntelGaudi 3 #AI accelerator offers a highly competitive alternative to NVIDIA’s H100 with higher performance, increased scalability, and PyTorch integration. Explore more key product benefits. https://t.co/sXdQKjYFw0 pic.twitter.com/iJSndBQkvT

— Intel AI (@IntelAI) April 9, 2024

Intel said the new Gaudi 3 chips will be available to customers in the third quarter, and that companies such as Dell, Hewlett Packard Enterprise, and Supermicro will build systems around them. Intel did not disclose a price range for the Gaudi 3.

AI Market Expansion Fuels Competition

Das Kamhout, Intel’s vice president of Xeon software, told reporters on a call, “We do expect it to be highly competitive” with Nvidia’s latest chips. He pointed to Intel’s competitive pricing and to the chip’s distinctive open, integrated network on chip, which uses industry-standard Ethernet, and called it a strong offering.

The data center AI market is expected to grow as cloud providers and businesses build out infrastructure to deploy AI software, which suggests there is room for other competitors even as Nvidia continues to make a significant share of AI chips.

The expenses associated with running generative AI models and procuring Nvidia GPUs have led companies to seek alternative suppliers to mitigate costs.

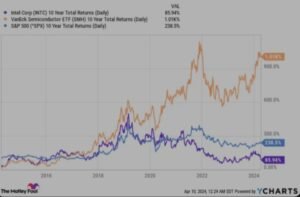

AI Stocks Surge: Nvidia Leads, Intel Lags Behind

Intel’s launch comes after the AI boom more than tripled Nvidia’s stock price over the past year. Intel’s stock, by contrast, has risen a more modest 18% over the same period.

AMD is also aiming to broaden its market presence by offering more AI chips for servers. Last year, it unveiled a new data center GPU known as the MI300X, which has already attracted customers such as Meta and Microsoft.

In recent months, Nvidia unveiled its B100 and B200 GPUs, which succeed the H100 and which the company says deliver performance improvements. These chips are slated to begin shipping later this year.

Intel’s Open-Source Approach and Future Manufacturing Plans

Nvidia’s success can be attributed in part to CUDA, its robust suite of proprietary software, which gives AI researchers access to all of the hardware features in a GPU. Intel, by contrast, is working with other industry leaders, including Google, Qualcomm, and Arm, to develop open, non-proprietary software. The initiative aims to make it easy for software companies to switch between chip providers.

“We are collaborating with the software ecosystem to develop open reference software and modular building blocks, empowering users to construct tailored solutions rather than being constrained by pre-packaged offerings,” remarked Sachin Katti, senior vice president of Intel’s networking group, during a call with reporters.

Gaudi 3 is built on a five-nanometer manufacturing process, which suggests that Intel is using an outside foundry to produce the chips. Beyond designing Gaudi 3, Intel plans to manufacture AI chips, potentially for outside customers, at a new factory in Ohio expected to begin operations in 2027 or 2028, CEO Patrick Gelsinger told reporters last month.